Quick Answer: To check if a page is indexed on Google, use the site: search operator (type "site:yoururl.com/page" in Google), inspect the URL in Google Search Console, check the Page Indexing Report, or use SEOmator's free crawl test tool to spot indexing blockers. If a site: search returns no results, your page probably isn't indexed (confirm it with the URL Inspection Tool), and you'll need to troubleshoot common issues like robots.txt blocks, noindex tags, or crawl errors.

Understanding the indexing status of your pages helps you identify potential issues and improve your site's visibility on the web. In my 7+ years of SEO experience, I've seen countless pages remain invisible to searchers simply because they weren't properly indexed. Let me walk you through several reliable methods to check if a page is indexed.

What is Indexing?

In SEO parlance, indexing refers to the process where search engines like Google collect and store information about web pages. I like to think of it as "Google reading a book (web page) and storing it on a shelf (Index) for future reference."

In real terms, Google sends out "spiders"—also known as bots or crawlers—to visit and scan web pages to understand and save the information they contain. This data is then stored in Google's database, called the Index.

Once a page is indexed, it becomes eligible to appear in search engine results when relevant queries are made.

Here's how indexing works:

Crawling: Search engine bots, also known as spiders or crawlers, scan the content of web pages by following links from one page to another, discovering new or updated content.

Analysis: After crawling a page, the search engine evaluates its content, including text, images, and metadata, to determine what the page is about and how it might be relevant to users.

Storing: The page's information is then stored in the search engine's index, which acts as a massive database containing details about billions of web pages.

Ranking: When a user performs a search, the search engine uses its index to identify the most relevant and useful pages, ranking them based on their content and authority.
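To make the crawling, analysis, storing, and ranking steps above more concrete, here's a deliberately tiny Python model of an inverted index. It's a teaching sketch under heavy simplifying assumptions (three hypothetical pages, naive word-count scoring) and says nothing about how Google's real index works internally. It does illustrate the key point of this article: a page that never made it into the index can't be returned for any query.

```python
import re
from collections import Counter, defaultdict

# Hypothetical "crawled" pages: URL -> page text (stands in for the crawling step).
PAGES = {
    "https://example.com/a": "How to check if a page is indexed on Google",
    "https://example.com/b": "Google Search Console URL Inspection tool guide",
    "https://example.com/c": "Baking bread at home",
}


def tokenize(text):
    """Analysis step (greatly simplified): lowercase the text and split it into words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Storing step: map each term to the pages containing it, with term counts."""
    index = defaultdict(Counter)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term][url] += 1
    return index


def search(index, query):
    """Ranking step (toy): order pages by total query-term hits.

    Only pages that are in the index can ever be returned.
    """
    scores = Counter()
    for term in tokenize(query):
        scores.update(index.get(term, Counter()))
    return [url for url, _ in scores.most_common()]
```

For example, `search(build_index(PAGES), "google indexed")` returns `["https://example.com/a", "https://example.com/b"]`: page a matches both words, page b matches one, and the bread page matches neither.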

If a page isn't indexed, it won't show up in search results, meaning it won't be discoverable through search engines. Indexing is a key step in ensuring a website's visibility on the web.

SEO beginners often confuse indexing with crawling, but these are two distinct (yet related) processes. Crawling refers to Google's bots visiting your web page, while indexing is when Google stores that page in its index.

According to Google's own documentation, its index contains hundreds of billions of web pages and keeps growing. With that many pages in play, it's worth confirming that yours are actually among them.

Why is Indexing Crucial for SEO?

Since I first started out, I've never been able to overemphasize the significance of indexing. Without it, a search engine would be like a library with no system for sorting its books. That'd be chaotic, right?

That's how essential indexing is for SEO. Google uses indexing to sort and store web pages so they can be shown to users when they're looking for related information.

From what I've seen analyzing SEO trends over the years, effective indexing correlates with better visibility and higher rankings. It's also a prerequisite for Google's ranking system: a page has to be in the index before it can be ranked at all.

Here's why indexing is important for SEO:

Visibility in Search Results: If a page is not indexed, it won't show up in search results, no matter how well it's optimized. Indexing is the first step for a page to be found by search engines and displayed to users when they search for relevant keywords.

Improved Organic Traffic: Indexing ensures that your content is available for search engines to rank. Without indexing, your page cannot drive organic traffic because it won't be accessible to users searching for related topics.

Better Search Engine Rankings: Search engines use indexing to evaluate the content of your pages and determine how well they match users' search queries. Indexed pages can be ranked based on their relevance, authority, and quality. Pages that are indexed and optimized have the potential to rank higher, which leads to more visibility and traffic.

Content Updates and Freshness: When a search engine re-indexes your site, it ensures that the most current version of your page is included in the index. If your page changes or gets updated, indexing helps search engines recognize and reflect those changes in their results, which is crucial for staying competitive in the SERPs.

Tracking and Analytics: Indexed pages allow website owners and SEO professionals to track performance metrics like impressions, clicks, and average position in tools such as Google Search Console. If a page isn't indexed, there's no search performance to monitor or analyze.

4 Methods to Check if a Page is Indexed on Google

Having a page indexed by Google is like owning a golden ticket to your SEO success. However, not many people know the different ways to check if their page has been indexed by Google.

That's why, in this section, I'll break down 4 different methods to check if your page is indexed. Whether you're a seasoned SEO expert or a novice, there's something to learn for everyone:

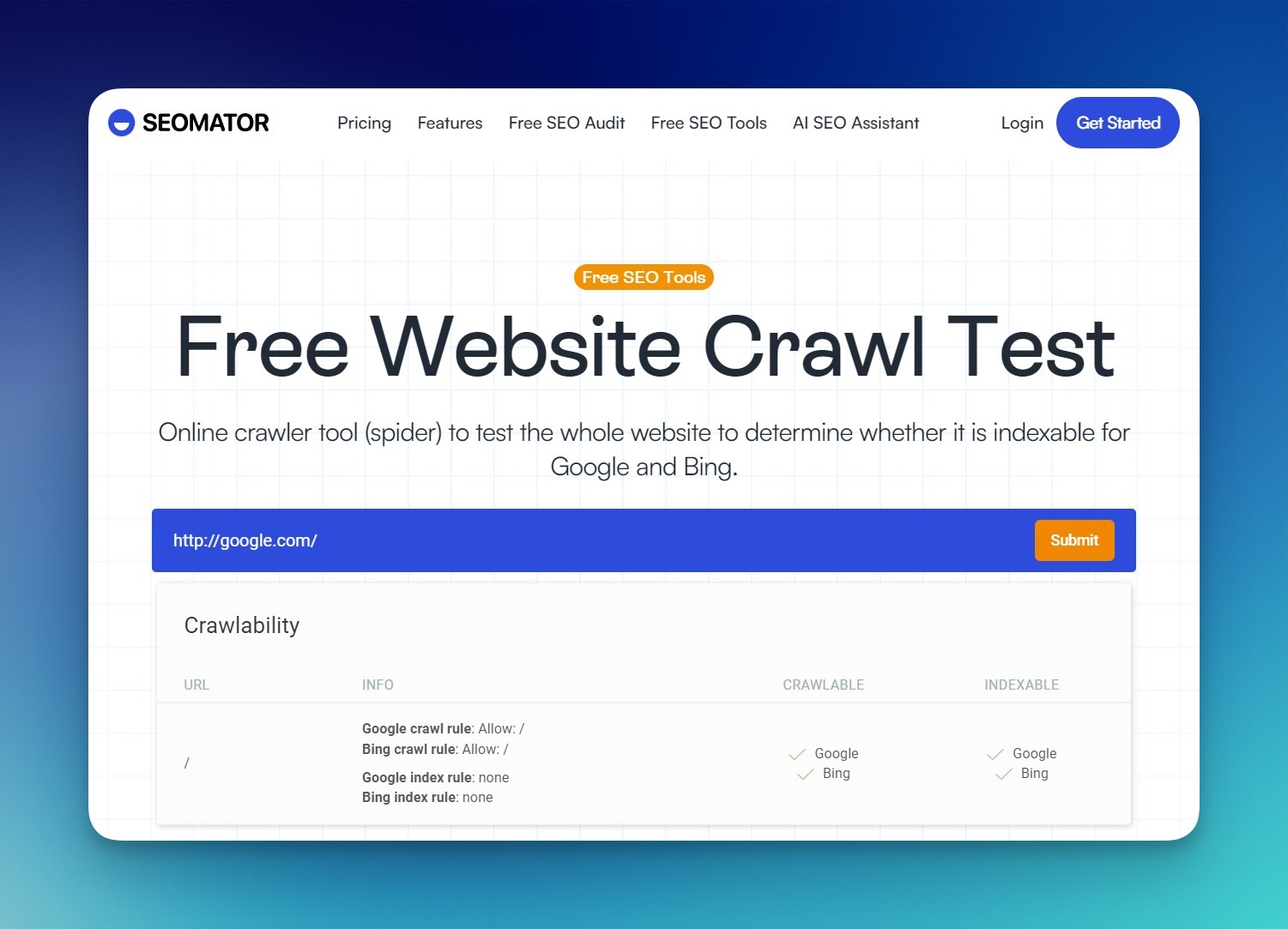

Method #1: SEOmator's Website Crawl Test

Online crawl tools can't see inside Google's index, but they can crawl your pages the way search engines do and flag issues that might keep a page from being crawled or indexed, along with other problems that affect your website's performance in search engine rankings.

SEOmator's Free Website Crawl Test is a tool that allows website owners and SEO professionals to analyze and understand how search engine crawlers (like Googlebot) interact with a website.

This test simulates how search engines crawl a site, helping identify potential SEO issues that could affect indexing, performance, or visibility in search engine results.

Simply enter the full URL (including https://) of the site you want to analyze and click "Submit."

The crawl process might take a few minutes, depending on the size and complexity of your website. The tool will scan through the pages and gather information related to how well your website is structured for search engines. After the crawl is complete, it will generate a detailed report that outlines the findings. Once you identify the issues, you can take the necessary steps to fix them.

Regularly using this tool can help ensure your website is optimized for search engines, improving its chances of ranking well in search results.
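SEOmator's tool is a hosted service, so its internals aren't something I can show here. As a rough do-it-yourself approximation of a few checks a crawl test performs, here's a Python sketch using only the standard library. It looks at one URL's HTTP status, `X-Robots-Tag` header, meta robots tag, and canonical tag. The function names and the user-agent string are my own placeholders, and the directive parsing is intentionally crude. It can't tell you whether Google actually indexed the page, only whether common blockers are present.

```python
import re
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin

BLOCKING = {"noindex", "none"}  # "none" is shorthand for "noindex, nofollow"


def _tokens(value):
    """Split a directive string like 'googlebot: noindex, follow' into lowercase tokens.

    Crude on purpose: it ignores which crawler a directive targets.
    """
    return {t for t in re.split(r"[,:\s]+", value.lower()) if t}


class IndexSignalParser(HTMLParser):
    """Collects robots/googlebot meta directives and the rel=canonical href."""

    def __init__(self):
        super().__init__()
        self.directives = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}  # HTMLParser lowercases attribute names
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            self.directives |= _tokens(a.get("content", ""))
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href") or None


def audit(url, status, x_robots_tag, html):
    """Return human-readable indexing blockers for one already-fetched page."""
    issues = []
    if status >= 400:
        issues.append(f"HTTP {status} response: error pages generally aren't indexed")
    if _tokens(x_robots_tag) & BLOCKING:
        issues.append(f"X-Robots-Tag header blocks indexing: {x_robots_tag!r}")
    parser = IndexSignalParser()
    parser.feed(html)
    parser.close()
    if parser.directives & BLOCKING:
        issues.append("meta robots tag contains noindex (or none)")
    if parser.canonical:
        target = urljoin(url, parser.canonical)  # resolve relative canonicals
        if target.rstrip("/") != url.rstrip("/"):
            issues.append(f"canonical points to a different URL: {target}")
    return issues


def fetch_and_audit(url):
    """Fetch a live URL (urllib follows redirects) and audit the final response."""
    req = urllib.request.Request(url, headers={"User-Agent": "index-check-sketch/0.1"})
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            x_robots = ", ".join(resp.headers.get_all("X-Robots-Tag") or [])
            html = resp.read().decode("utf-8", errors="replace")
            return audit(resp.geturl(), resp.status, x_robots, html)
    except urllib.error.HTTPError as err:
        x_robots = ", ".join(err.headers.get_all("X-Robots-Tag") or [])
        return audit(url, err.code, x_robots, "")
```

`fetch_and_audit("https://yourwebsite.com/page")` returns a list of problems. An empty list only means none of these four blockers were found, not that Google has indexed the page.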

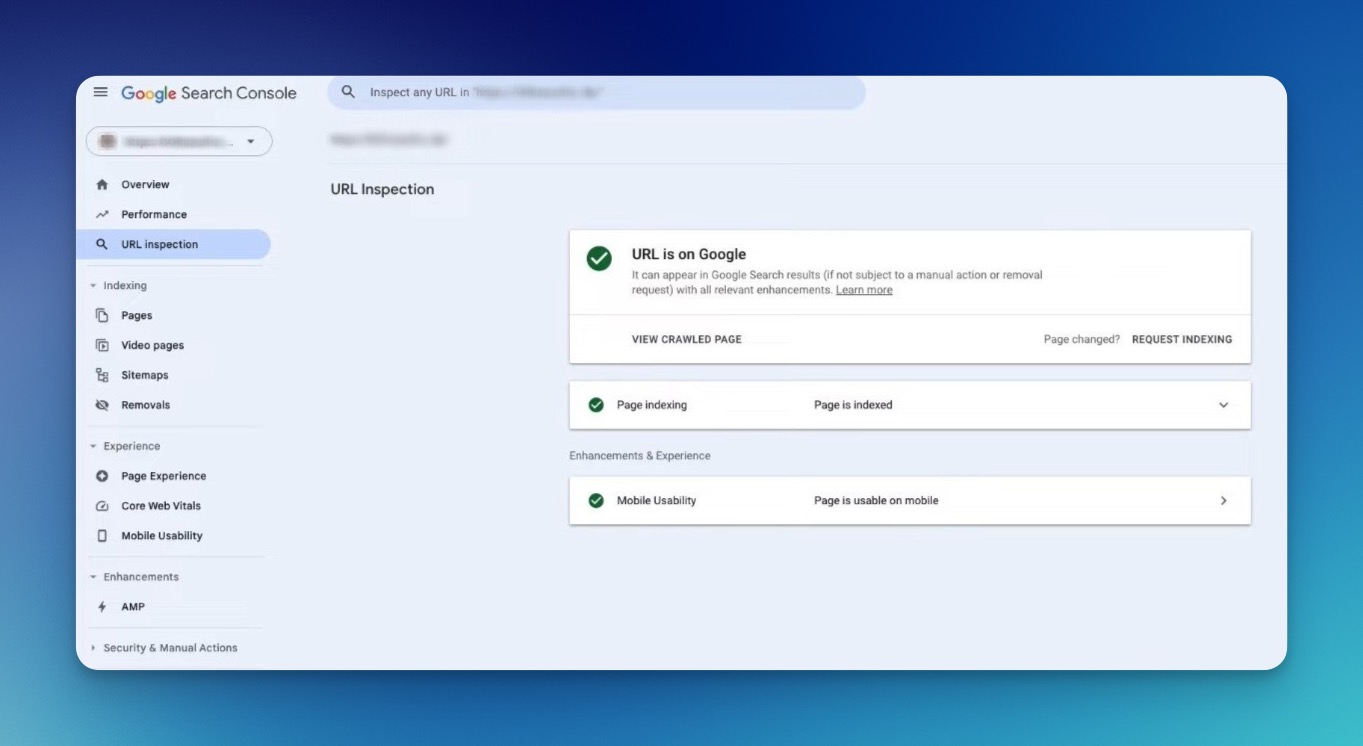

Method #2: The URL Inspection Tool in Google Search Console

The URL Inspection Tool in Google Search Console is one of the most reliable ways to check if a page is indexed. It helps you check whether a specific page of your website is indexed by Google, identify any crawl issues, and view how Googlebot sees that page.

Go to Google Search Console and log in with your Google account. If you haven't already added your website to Google Search Console, do so by following the verification steps.

Once you're in the Search Console dashboard, click "URL Inspection" in the left-hand menu. This opens the tool where you can enter specific URLs for inspection.

Enter the full URL (including https://) of the page you want to check in the search bar at the top and press Enter. The tool will then run a quick check.

If the page is indexed, you'll see a message like "URL is on Google," confirming that the page is in Google's index. If it's not indexed, you'll typically see "URL is not on Google," along with a specific reason (for example, a noindex directive or a robots.txt block).

If the page is indexed, you can click "View Crawled Page" to see details about how Googlebot sees the page, including its source code, metadata, and any potential errors that might affect indexing.

If the page has crawl issues, Google will provide a reason for why the page wasn't indexed, such as technical issues or restrictions in your robots.txt file.

Keep in mind that you can only inspect URLs from properties you've verified (or been given access to) in Google Search Console, so complete the verification step before using the tool.

By regularly checking your pages using this tool, you can ensure that your content is visible in search results and resolve any technical problems affecting your site's performance.
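If you need to check more than a handful of URLs, Search Console also exposes this data through its URL Inspection API. Below is a minimal Python sketch using only the standard library. It assumes you already have an OAuth 2.0 access token authorized for a Search Console scope on a property you've verified (obtaining that token is outside this sketch), and the response field names reflect my reading of Google's API reference, so double-check them against the current docs. The API reports indexing status only; it can't request indexing, which you still do from the Search Console interface.

```python
import json
import urllib.request

ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def inspect_url(page_url, site_url, access_token):
    """Call the URL Inspection API for one URL and return the parsed JSON response.

    site_url is the property exactly as registered in Search Console,
    e.g. "https://yourwebsite.com/" or "sc-domain:yourwebsite.com".
    access_token is an OAuth 2.0 token you obtained separately (assumption).
    """
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": site_url}).encode("utf-8")
    req = urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def summarize(response):
    """Pull the indexing-related fields out of an inspection response (missing -> None)."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return {
        "verdict": status.get("verdict"),            # e.g. PASS, FAIL, NEUTRAL
        "coverage": status.get("coverageState"),     # e.g. "Submitted and indexed"
        "robots_txt": status.get("robotsTxtState"),  # e.g. ALLOWED or DISALLOWED
        "indexing": status.get("indexingState"),     # e.g. BLOCKED_BY_META_TAG
        "last_crawl": status.get("lastCrawlTime"),
        "google_canonical": status.get("googleCanonical"),
    }
```

`summarize(inspect_url("https://yourwebsite.com/page", "sc-domain:yourwebsite.com", token))` returns a small dict. In my understanding, a `verdict` of `PASS` corresponds to the "URL is on Google" message. The API is rate-limited per property, so pace any bulk checks.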

Method #3: The Page Indexing Report in Google Search Console

The Page Indexing Report in Google Search Console provides insights into the status of your website's pages in Google's index. It helps you monitor how many of your pages are indexed, identify issues preventing indexing, and track the overall health of your site's presence in search results.

Go to Google Search Console. Log in with your Google account and select the website property you want to inspect. In the left-hand sidebar, click on the "Indexing" tab to expand the menu. Select "Pages" in the Indexing section.

The Page Indexing Report provides a broader overview of your website's indexing status on Google.

However, keep in mind that the data in this report may be slightly outdated compared to the real-time information you can obtain through the URL Inspection Tool. If you need the most up-to-date details about whether a page is indexed and when Google last crawled it, the URL Inspection Tool, which I mentioned above, is your best option.

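Additionally, if you have a large website, be aware that the Page Indexing Report shows at most 1,000 example URLs per issue, so you won't see the full list of unindexed pages if there are more than that. You can work around this limit by adding separate URL-prefix properties for individual subfolders (each gets its own sample) or by filtering the report to a specific submitted sitemap.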

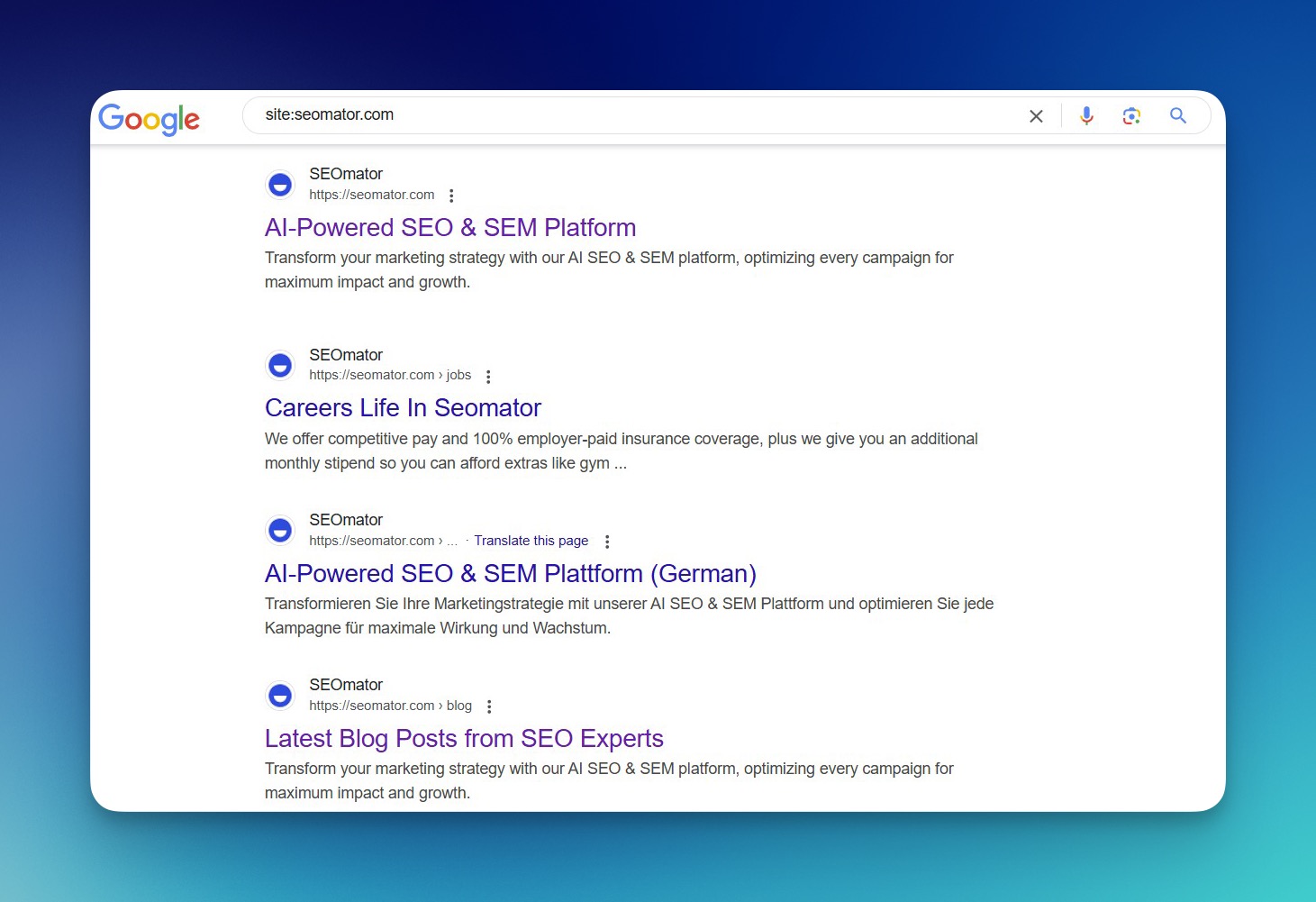

Method #4: The "site:" Command

The site: operator is a simple way to check if a website or specific page is indexed by Google. It's a bit old-school, but it still works well for quick checks.

Go to the Google search engine homepage. In the search bar, type the command "site:" followed by the URL of your website or page.

To check a whole domain, it should look like this:

site:yourwebsite.com

To check a single page, add its path:

site:yourwebsite.com/your-page

If the site is indexed, Google will show a list of pages from your domain that are in its index, which gives you a rough idea of how many pages are indexed.

If no results are shown, the website or page most likely isn't indexed, or Google simply hasn't crawled and indexed it yet (common for brand-new pages).

However, the number of results Google shows is only an estimate, not an exact count of indexed pages, and some indexed pages might not appear in the results at all.

The command shows results based on Google's current index and may not reflect the latest status or changes, which is why the URL Inspection Tool in Google Search Console can provide more detailed and real-time information.

Also, don't confuse this check with a ranking check. Seeing your pages appear for a site: query only confirms they're indexed, not that they rank well for your target keywords.
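If you run site: checks often, a tiny helper can build the search link for you. This is a convenience sketch: `site_query_url` is a hypothetical helper name, and I'd open the resulting link in a browser rather than fetching it programmatically, since Google discourages automated querying of its search results.

```python
from urllib.parse import urlencode


def site_query_url(page_url):
    """Build a Google search link for a site: check of one page or domain.

    The scheme (http:// or https://) is dropped because the site: operator
    is normally written without it; urlencode percent-encodes ':' and '/'.
    """
    target = page_url.split("://", 1)[-1]
    return "https://www.google.com/search?" + urlencode({"q": f"site:{target}"})
```

For example, `site_query_url("https://yourwebsite.com/your-page")` produces a link that runs the query `site:yourwebsite.com/your-page`.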

Why is Your Page Not Indexed?

If you've recently published content on your website and noticed that it's not appearing in search engine results, you're not alone. One of the most common SEO challenges website owners face is figuring out why their pages aren't indexed by search engines.

Over the years, I've encountered numerous reasons why a page may not be indexed by Google. While some reasons are technical, others are as simple as over-optimization or keyword stuffing.

Understanding the causes of non-indexation is the first step toward resolving the issue and improving your site's overall visibility and SEO performance.

Here are some common culprits:

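Robots.txt File Blocking: This is one of the most common issues. A misconfigured robots.txt file can stop Google's crawler from accessing your page. Strictly speaking, robots.txt controls crawling rather than indexing, but because Google can't read a page it isn't allowed to crawl, a blocked page usually won't be indexed properly.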

Incorrect Meta Robots Tag: An incorrect meta robots tag could completely block a page from being indexed. If set to "noindex," search engine bots won't index that page.

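Non-Canonical URLs: If your page's canonical tag points to a different URL (for example, because it was set by mistake), or Google selects another page as the canonical version, Google will generally index that other URL instead of yours.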

Duplicate Content: If Google detects significant duplicated content on your page, it may choose not to index it.

Poorly Written Content: Poorly written or thin content with limited value can also prevent indexing.

Slow Page Speed: If your page takes a significant time to load, Google might skip it during crawling, preventing it from being indexed.

Crawl Errors: Server errors like "500 Internal Server Error" can interrupt Googlebot's crawling process and prevent a page from being indexed.

These are just a few examples of issues.

Start by assessing these common issues, and keep a record of what you check and change. That documentation can give you valuable insights into your website's performance and areas for improvement.
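To test the first culprit, robots.txt blocking, Python's standard-library `urllib.robotparser` can tell you whether a given user agent may fetch a URL. Treat this as a rough first pass: Python's parser uses simple prefix matching where the first matching rule wins, and it doesn't understand the `*` and `$` path wildcards Google supports, so Google's own robots.txt report in Search Console has the final word. `googlebot_allowed` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url.

    Uses Python's parser: prefix matching, first matching rule wins,
    no support for Google's mid-path '*' or '$' wildcards.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

With the rules `User-agent: *` and `Disallow: /private/`, a URL under `/private/` comes back `False` and a blog post comes back `True`. To check your live file instead of pasted text, `RobotFileParser("https://yourwebsite.com/robots.txt")` followed by `.read()` fetches and parses it, after which `can_fetch("Googlebot", page_url)` works the same way.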

What Can You Do If Your Page is Not Indexed?

Discovering that a page on your website isn't indexed by search engines can be frustrating, especially after putting time and effort into creating quality content.

After identifying the potential reasons why a page might not be indexed, the natural next step is troubleshooting. The process might take a bit of time, but don't worry: patience and persistence are SEO's best companions.

The first step is always to verify if the page is indeed not indexed. You can use the methods I've discussed earlier.

Once confirmed, here are steps you can follow:

Check Robots.txt: A bot blocked by your robots.txt file is a straightforward issue to resolve. Open the file at the root of your domain (for example, yourwebsite.com/robots.txt) and check whether a Disallow rule matches the page's path for Googlebot or for all user agents (User-agent: *).

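Analyze Meta Tags: Check your page's HTML source code for a robots meta tag containing "noindex," and check the HTTP response for an X-Robots-Tag: noindex header. If either is present, it's almost certainly what's keeping your page out of the index. A "nofollow" directive on its own doesn't block indexing; it only tells Google not to follow the page's links.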

Examine Sitemap: Ensure your sitemap is up to date, includes the page, and has been submitted in Google Search Console. Incorrect or missing sitemaps can lead to indexing issues.

Look for Duplicate Content: Check to see if your content is duplicated on other pages or websites.

Inspect Canonical Tags: If your page has a canonical tag pointing to a different page, it might cause indexing issues.

Re-submit for Indexing: Once you've made sure there are no issues blocking the crawler, you can manually re-submit your page for indexing using Google Search Console's URL Inspection Tool.

Improve the Quality of Your Page: If your page offers little to no value or contains thin content, consider improving the quality and richness of your page's content. Google's ultimate goal is to provide high-quality, relevant content to its users.

Remove Unwanted Outbound Links: Too many outbound links, especially to spam or low-quality content, can decrease the quality of your page in the eyes of Google, causing indexing issues.

Fix Load Times: If your page takes too long to load, work on improving its speed.

Implement Proper Server Settings: If the page returns a 500 (or any other 5xx) server error, fix the underlying server problem so the page reliably loads with a 200 status. These are basic HTTP problems that need to be resolved for better crawling and indexing.
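For the "Examine Sitemap" step, here's a small standard-library sketch that lists the `<loc>` URLs in a sitemap and tells you whether a given page is among them. It assumes a standard sitemaps.org XML sitemap (it also reads the `<loc>` entries of a sitemap index, but won't follow them into child sitemaps), and both function names are hypothetical. The match is an exact string comparison, so `http` vs `https` or a trailing slash counts as a difference, which is worth catching anyway.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps.org sitemaps and sitemap indexes.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> URL in a sitemap (str or bytes), whitespace-trimmed."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iterfind(".//sm:loc", SITEMAP_NS) if loc.text]


def missing_from_sitemap(xml_text, page_url):
    """True if page_url is not listed verbatim in the sitemap."""
    return page_url not in set(sitemap_urls(xml_text))
```

Download your sitemap (for example, with `urllib.request.urlopen(...).read()`, since `ET.fromstring` accepts bytes too) and pass it to `missing_from_sitemap` along with the page's canonical URL.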

Don't forget, it takes time for changes to register and for Google to crawl your site again.

Often, users expect immediate results after making these changes. However, it's key to remember that Google operates on its own timeline and these changes may take anywhere from a few days to a few weeks to reflect.

Frequently Asked Questions

How long does it take for Google to index a new page?

Google can index a new page anywhere from a few hours to several weeks, depending on factors like your site's crawl budget, how often it's updated, and whether you've submitted a sitemap. Submitting the URL through Google Search Console's URL Inspection Tool can speed up the process.

What's the difference between crawling and indexing?

Crawling is when Google's bots visit and scan your web page to discover its content. Indexing happens after crawling—it's when Google stores the page's information in its database so it can appear in search results. A page can be crawled but not indexed if Google decides the content isn't valuable enough.

Can I force Google to index my page?

You can't force Google to index your page, but you can request indexing through Google Search Console's URL Inspection Tool. This doesn't guarantee immediate indexing, but it puts your page in the queue for Googlebot to crawl.

Why would Google choose not to index a page?

Google may skip indexing pages with thin content, duplicate content, noindex tags, robots.txt blocks, slow load times, or low-quality signals. Focus on creating unique, valuable content and ensuring no technical barriers prevent crawling.

Key Takeaways

- Indexing is essential for search visibility—if your page isn't indexed, it won't appear in search results

- Use the site: operator for quick checks, Google Search Console for detailed insights

- Common indexing blockers include robots.txt restrictions, noindex tags, and duplicate content

- SEOmator's free crawl test tool provides an easy way to analyze how search engines see your site

- Regular indexing checks help maintain your site's SEO health and visibility
